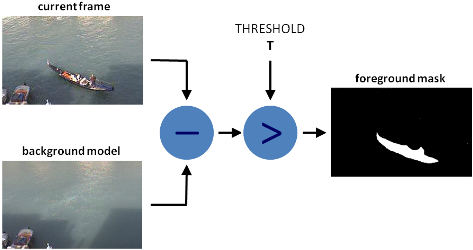

- Background subtraction (BS) is a common and widely used technique for generating a foreground mask (namely, a binary image containing the pixels belonging to moving objects in the scene) by using static cameras.

As the name suggests, BS calculates the foreground mask by subtracting a background model from the current frame. The background model contains the static part of the scene or, more generally, everything that can be considered background given the characteristics of the observed scene.

Background modeling consists of two main steps:

- Background Initialization;

- Background Update.

In the first step, an initial model of the background is computed, while in the second step that model is updated in order to adapt to possible changes in the scene.
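The two steps can be sketched with a deliberately simple model. The block below is a minimal illustration, assuming grayscale frames stored as flat arrays; the `Frame` alias, the `RunningAverageModel` class, and the `threshold` and `alpha` parameters are hypothetical names introduced here. It uses a running average, which is not the MOG or MOG2 algorithm used later in this tutorial.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper type: a grayscale frame stored as a flat pixel array.
using Frame = std::vector<std::uint8_t>;

// Simplified running-average background model (illustration only,
// not the MOG or MOG2 algorithm).
class RunningAverageModel {
public:
    // Background initialization: the first frame becomes the initial model.
    explicit RunningAverageModel(const Frame& first)
        : background_(first.begin(), first.end()) {}

    // Subtract the model from the frame to get a binary mask (255 = foreground),
    // then update the model: B <- (1 - alpha) * B + alpha * frame.
    Frame apply(const Frame& frame, double threshold, double alpha) {
        assert(frame.size() == background_.size());
        Frame mask(frame.size(), 0);
        for (std::size_t i = 0; i < frame.size(); ++i) {
            if (std::fabs(frame[i] - background_[i]) > threshold) {
                mask[i] = 255;
            }
            background_[i] = (1.0 - alpha) * background_[i] + alpha * frame[i];
        }
        return mask;
    }

private:
    std::vector<double> background_;  // current background estimate per pixel
};
```

With a large `alpha`, an object that stops moving is absorbed into the background after a few frames; with a small `alpha`, it stays in the foreground mask for longer.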

- In this tutorial we will learn how to perform BS by using OpenCV. As input, we will use data from the publicly available Background Models Challenge (BMC) data set.

Goals

In this tutorial you will learn how to:

- Read data from videos by using cv::VideoCapture or from image sequences by using cv::imread;

- Create and update the background model by using the cv::BackgroundSubtractor class;

- Get and show the foreground mask by using cv::imshow;

- Save the output by using cv::imwrite to quantitatively evaluate the results.

Code

The source code is listed below. The user can choose to process either a video file or a sequence of images.

Two different methods are used to generate two foreground masks:

- cv::bgsegm::BackgroundSubtractorMOG

- cv::BackgroundSubtractorMOG2

The results as well as the input data are shown on the screen. The source file can be downloaded here.

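//opencv
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/videoio.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/video.hpp>
#include <opencv2/bgsegm.hpp> //MOG is part of the opencv_contrib bgsegm module
//C
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
//C++
#include <iostream>
#include <sstream>

using namespace cv;
using namespace std;

//global variables
Mat frame; //current frame
Mat fgMaskMOG; //foreground mask generated by the MOG method
Mat fgMaskMOG2; //foreground mask generated by the MOG2 method
Ptr<BackgroundSubtractor> pMOG; //MOG background subtractor
Ptr<BackgroundSubtractor> pMOG2; //MOG2 background subtractor
char keyboard; //input from keyboard

void help();
void processVideo(char* videoFilename);
void processImages(char* firstFrameFilename);

void help()
{
    cout
    << "--------------------------------------------------------------------------" << endl
    << "This program shows how to use background subtraction methods provided by " << endl
    << " OpenCV. You can process both videos (-vid) and images (-img)." << endl
    << endl
    << "Usage:" << endl
    << "./bg_sub {-vid <video filename>|-img <image filename>}" << endl
    << "for example: ./bg_sub -vid video.avi" << endl
    << "or: ./bg_sub -img /data/images/1.png" << endl
    << "--------------------------------------------------------------------------" << endl
    << endl;
}

int main(int argc, char* argv[])
{
    //print help information
    help();
    //check the input parameters
    if(argc != 3) {
        cerr << "Incorrect input list" << endl;
        cerr << "Exiting..." << endl;
        return EXIT_FAILURE;
    }
    //create GUI windows
    namedWindow("Frame");
    namedWindow("FG Mask MOG");
    namedWindow("FG Mask MOG 2");
    //create the background subtractor objects with default parameters
    pMOG = bgsegm::createBackgroundSubtractorMOG(); //MOG approach
    pMOG2 = createBackgroundSubtractorMOG2(); //MOG2 approach
    if(strcmp(argv[1], "-vid") == 0) {
        //input data coming from a video
        processVideo(argv[2]);
    }
    else if(strcmp(argv[1], "-img") == 0) {
        //input data coming from a sequence of images
        processImages(argv[2]);
    }
    else {
        cerr << "Please, check the input parameters." << endl;
        cerr << "Exiting..." << endl;
        return EXIT_FAILURE;
    }
    //destroy GUI windows
    destroyAllWindows();
    return EXIT_SUCCESS;
}

void processVideo(char* videoFilename) {
    //create the capture object
    VideoCapture capture(videoFilename);
    if(!capture.isOpened()){
        cerr << "Unable to open video file: " << videoFilename << endl;
        exit(EXIT_FAILURE);
    }
    //read input data. ESC or 'q' for quitting
    keyboard = 0;
    while( keyboard != 'q' && keyboard != 27 ){
        //read the current frame
        if(!capture.read(frame)) {
            cerr << "Unable to read next frame." << endl;
            cerr << "Exiting..." << endl;
            exit(EXIT_FAILURE);
        }
        //compute the foreground masks and update the background models
        pMOG->apply(frame, fgMaskMOG);
        pMOG2->apply(frame, fgMaskMOG2);
        //get the frame number and write it on the current frame
        stringstream ss;
        rectangle(frame, cv::Point(10, 2), cv::Point(100,20), cv::Scalar(255,255,255), -1);
        ss << capture.get(CAP_PROP_POS_FRAMES);
        string frameNumberString = ss.str();
        putText(frame, frameNumberString.c_str(), cv::Point(15, 15), FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0,0,0));
        //show the current frame and the foreground masks
        imshow("Frame", frame);
        imshow("FG Mask MOG", fgMaskMOG);
        imshow("FG Mask MOG 2", fgMaskMOG2);
        //get the input from the keyboard
        keyboard = (char)waitKey(30);
    }
    //release the capture object
    capture.release();
}

void processImages(char* firstFrameFilename) {
    //read the first file of the sequence
    frame = imread(firstFrameFilename);
    if(frame.empty()){
        cerr << "Unable to open first image frame: " << firstFrameFilename << endl;
        exit(EXIT_FAILURE);
    }
    //current image filename
    string fn(firstFrameFilename);
    //read input data. ESC or 'q' for quitting
    keyboard = 0;
    while( keyboard != 'q' && keyboard != 27 ){
        //compute the foreground masks and update the background models
        pMOG->apply(frame, fgMaskMOG);
        pMOG2->apply(frame, fgMaskMOG2);
        //split the path into prefix, frame number and suffix
        size_t index = fn.find_last_of("/");
        if(index == string::npos) {
            index = fn.find_last_of("\\");
        }
        size_t index2 = fn.find_last_of(".");
        string prefix = fn.substr(0,index+1);
        string suffix = fn.substr(index2);
        string frameNumberString = fn.substr(index+1, index2-index-1);
        istringstream iss(frameNumberString);
        int frameNumber = 0;
        iss >> frameNumber;
        //write the frame number on the current frame
        rectangle(frame, cv::Point(10, 2), cv::Point(100,20), cv::Scalar(255,255,255), -1);
        putText(frame, frameNumberString.c_str(), cv::Point(15, 15), FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0,0,0));
        //show the current frame and the foreground masks
        imshow("Frame", frame);
        imshow("FG Mask MOG", fgMaskMOG);
        imshow("FG Mask MOG 2", fgMaskMOG2);
        //get the input from the keyboard
        keyboard = (char)waitKey(30);
        //search for the next image in the sequence
        ostringstream oss;
        oss << (frameNumber + 1);
        string nextFrameNumberString = oss.str();
        string nextFrameFilename = prefix + nextFrameNumberString + suffix;
        //read the next frame
        frame = imread(nextFrameFilename);
        if(frame.empty()){
            cerr << "Unable to open image frame: " << nextFrameFilename << endl;
            exit(EXIT_FAILURE);
        }
        //update the path of the current frame
        fn.assign(nextFrameFilename);
    }
}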

Explanation

We discuss the main parts of the above code:

- First, three Mat objects are allocated to store the current frame and two foreground masks, obtained by using two different BS algorithms.

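Mat frame; //current frame
Mat fgMaskMOG; //foreground mask generated by the MOG method
Mat fgMaskMOG2; //foreground mask generated by the MOG2 method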

- Two cv::BackgroundSubtractor objects will be used to generate the foreground masks. In this example, default parameters are used, but it is also possible to declare specific parameters in the create function.

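Ptr<BackgroundSubtractor> pMOG; //MOG background subtractor
Ptr<BackgroundSubtractor> pMOG2; //MOG2 background subtractor
...
//create the background subtractor objects
pMOG = bgsegm::createBackgroundSubtractorMOG(); //MOG approach (opencv_contrib bgsegm module)
pMOG2 = createBackgroundSubtractorMOG2(); //MOG2 approach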

- The command line arguments are analysed. The user can choose between two options:

- video files (by choosing the option -vid);

- image sequences (by choosing the option -img).

if(strcmp(argv[1], "-vid") == 0) {
    //input data coming from a video
    processVideo(argv[2]);
}
else if(strcmp(argv[1], "-img") == 0) {
    //input data coming from a sequence of images
    processImages(argv[2]);
}

- Suppose you want to process a video file. The video is read until the end is reached or the user presses the 'q' or 'ESC' key.

while( (char)keyboard != 'q' && (char)keyboard != 27 ){
    //read the current frame
    if(!capture.read(frame)) {
        cerr << "Unable to read next frame." << endl;
        cerr << "Exiting..." << endl;
        exit(EXIT_FAILURE);
    }

- Every frame is used both for calculating the foreground mask and for updating the background. If you want to change the learning rate used for updating the background model, it is possible to set a specific learning rate by passing a third parameter to the 'apply' method.

pMOG->apply(frame, fgMaskMOG);

pMOG2->apply(frame, fgMaskMOG2);

- The current frame number can be extracted from the cv::VideoCapture object and stamped in the top left corner of the current frame. A white rectangle is used to highlight the black colored frame number.

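//get the frame number and write it on the current frame
stringstream ss;
rectangle(frame, cv::Point(10, 2), cv::Point(100,20), cv::Scalar(255,255,255), -1);
ss << capture.get(CAP_PROP_POS_FRAMES);
string frameNumberString = ss.str();
putText(frame, frameNumberString.c_str(), cv::Point(15, 15), FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0,0,0));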

- We are ready to show the current input frame and the results.

//show the current frame and the foreground masks
imshow("Frame", frame);
imshow("FG Mask MOG", fgMaskMOG);
imshow("FG Mask MOG 2", fgMaskMOG2);

- The same operations can be performed using a sequence of images as input. The processImages function is called and, instead of using a cv::VideoCapture object, the images are read with cv::imread after working out the path of the next frame to read.

//read the first file of the sequence
frame = imread(firstFrameFilename);
if(frame.empty()){
    //error in opening the first image
    cerr << "Unable to open first image frame: " << firstFrameFilename << endl;
    exit(EXIT_FAILURE);
}
...
//search for the next image in the sequence
ostringstream oss;
oss << (frameNumber + 1);
string nextFrameNumberString = oss.str();
string nextFrameFilename = prefix + nextFrameNumberString + suffix;
//read the next frame
frame = imread(nextFrameFilename);
if(frame.empty()){
    //error in opening the next image in the sequence
    cerr << "Unable to open image frame: " << nextFrameFilename << endl;
    exit(EXIT_FAILURE);
}
//update the path of the current frame
fn.assign(nextFrameFilename);
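The path arithmetic used to find the next frame can be isolated in a small function that needs only the standard library. This is a sketch, assuming file names made of a plain (not zero-padded) frame number followed by an extension; the name `nextFramePath` is hypothetical. It searches for either path separator in one call, which differs slightly from the tutorial code, where "/" is tried first and "\\" only as a fallback.

```cpp
#include <cstddef>
#include <sstream>
#include <string>

// Hypothetical helper: given ".../<n>.<ext>", returns ".../<n+1>.<ext>".
// Assumes the file name is a plain frame number followed by an extension.
std::string nextFramePath(const std::string& path) {
    // Start of the file name: one past the last '/' or '\\', or 0 if none.
    const std::size_t slash = path.find_last_of("/\\");
    const std::size_t nameStart = (slash == std::string::npos) ? 0 : slash + 1;

    // Start of the extension; if there is none, treat it as empty.
    std::size_t dot = path.find_last_of('.');
    if (dot == std::string::npos || dot < nameStart) {
        dot = path.size();
    }

    // Parse the current frame number (stays 0 if the name is not numeric).
    int frameNumber = 0;
    std::istringstream iss(path.substr(nameStart, dot - nameStart));
    iss >> frameNumber;

    // Rebuild the path with the incremented frame number.
    std::ostringstream next;
    next << path.substr(0, nameStart) << (frameNumber + 1) << path.substr(dot);
    return next.str();
}
```

Because the number is parsed as an integer and written back without padding, a name such as `007.png` would map to `8.png`, so zero-padded sequences need a different formatting step.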

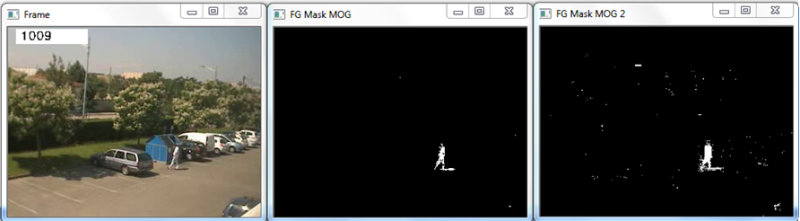

Results

Given the following input parameters:

The output of the program will look like the following:

- The video file Video_001.avi is part of the Background Models Challenge (BMC) data set and can be downloaded from the following link: Video_001 (about 32 MB).

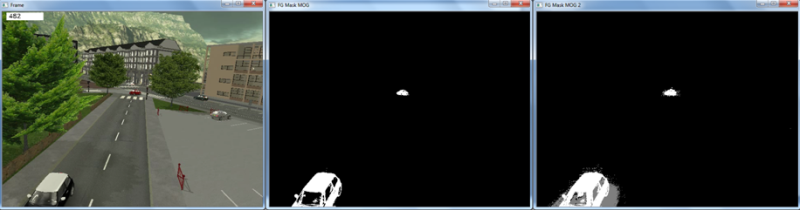

If you want to process a sequence of images, then the '-img' option has to be chosen:

The output of the program will look like the following:

- The sequence of images used in this example is part of the Background Models Challenge (BMC) data set and can be downloaded from the following link: sequence 111 (about 708 MB). Please note that this example works only on sequences in which the filename format is <n>.png, where n is the frame number (e.g., 7.png).

Evaluation

To quantitatively evaluate the results obtained, we need to:

- Save the output images;

- Have the ground truth images for the chosen sequence.

To save the output images, we can use cv::imwrite. Adding the following code saves the foreground masks.

string imageToSave = "output_MOG_" + frameNumberString + ".png";
bool saved = imwrite(imageToSave, fgMaskMOG);
if(!saved) {
    cerr << "Unable to save " << imageToSave << endl;
}

Once we have collected the result images, we can compare them with the ground truth data. There exist several publicly available sequences for background subtraction that come with ground truth data. If you decide to use the Background Models Challenge (BMC), then the result images can be used as input for the BMC Wizard. The wizard can compute different measures about the accuracy of the results.
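To give an idea of what such a comparison involves, the sketch below scores one result mask against its ground truth using precision, recall and F-measure. These are common measures for binary masks; which measures the BMC Wizard actually reports is not stated here, so treat the choice as an assumption. The `MaskScore` struct and the `scoreMask` function are hypothetical names, and masks are assumed to be flat arrays in which any non-zero pixel is foreground.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical result type for one mask comparison.
struct MaskScore {
    double precision;  // fraction of detected foreground that is truly foreground
    double recall;     // fraction of true foreground that was detected
    double fMeasure;   // harmonic mean of precision and recall
};

// Hypothetical helper: compares a result mask with a ground truth mask.
// Any non-zero pixel counts as foreground in both masks.
MaskScore scoreMask(const std::vector<std::uint8_t>& result,
                    const std::vector<std::uint8_t>& truth) {
    assert(result.size() == truth.size());
    std::size_t tp = 0, fp = 0, fn = 0;
    for (std::size_t i = 0; i < result.size(); ++i) {
        const bool predicted = result[i] != 0;
        const bool actual = truth[i] != 0;
        if (predicted && actual) ++tp;   // true positive
        else if (predicted) ++fp;        // false positive
        else if (actual) ++fn;           // false negative
    }
    MaskScore score = {0.0, 0.0, 0.0};
    if (tp + fp > 0) score.precision = static_cast<double>(tp) / (tp + fp);
    if (tp + fn > 0) score.recall = static_cast<double>(tp) / (tp + fn);
    if (score.precision + score.recall > 0.0) {
        score.fMeasure = 2.0 * score.precision * score.recall /
                         (score.precision + score.recall);
    }
    return score;
}
```

When the masks are held in `Mat` objects, the same counts can be accumulated by iterating over the pixels of the saved output image and the matching ground truth image.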
