Goal

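In this tutorial you will learn how to:

- Use the OpenCV function cv::warpAffine to apply an affine transformation to an image.

- Use the OpenCV function cv::getAffineTransform to compute an affine transformation from three pairs of points.

- Use the OpenCV function cv::getRotationMatrix2D to obtain a \(2 \times 3\) rotation matrix.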

Theory

What is an Affine Transformation?

- A transformation that can be expressed in the form of a matrix multiplication (linear transformation) followed by a vector addition (translation).

From the above, we can use an Affine Transformation to express:

- Rotations (linear transformation)

- Translations (vector addition)

- Scale operations (linear transformation)

In essence, an Affine Transformation represents a relation between two images.

The usual way to represent an Affine Transformation is by using a \(2 \times 3\) matrix.

\[ A = \begin{bmatrix} a_{00} & a_{01} \\ a_{10} & a_{11} \end{bmatrix}_{2 \times 2}, \quad B = \begin{bmatrix} b_{00} \\ b_{10} \end{bmatrix}_{2 \times 1} \]

\[ M = \begin{bmatrix} A & B \end{bmatrix} = \begin{bmatrix} a_{00} & a_{01} & b_{00} \\ a_{10} & a_{11} & b_{10} \end{bmatrix}_{2 \times 3} \]

To transform a 2D vector \(X = \begin{bmatrix}x \\ y\end{bmatrix}\) using \(A\) and \(B\), we can equivalently write:

\(T = A \cdot \begin{bmatrix}x \\ y\end{bmatrix} + B\) or \(T = M \cdot [x, y, 1]^{T}\)

\[T = \begin{bmatrix} a_{00}x + a_{01}y + b_{00} \\ a_{10}x + a_{11}y + b_{10} \end{bmatrix}\]

How do we get an Affine Transformation?

- We mentioned that an Affine Transformation is basically a relation between two images. The information about this relation can come, roughly, in two ways:

- We know both \(X\) and \(T\), and we know that they are related. Our task is then to find \(M\).

- We know \(M\) and \(X\). To obtain \(T\) we only need to apply \(T = M \cdot [x, y, 1]^{T}\). Our information about \(M\) may be explicit (i.e. we have the 2-by-3 matrix) or it can come as a geometric relation between points.

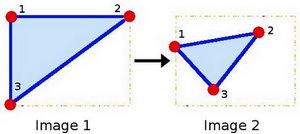

Let's explain the second case in more detail. Since \(M\) relates two images, we can analyze the simplest case, in which it relates three points in both images. Look at the figure above:

The points 1, 2 and 3 (forming a triangle in image 1) are mapped into image 2, where they still form a triangle, but one whose shape has changed noticeably. If we find the Affine Transformation from these 3 points (you can choose them as you like), we can then apply the same relation to all the pixels in the image.

Code

- What does this program do?

- Loads an image

- Applies an Affine Transform to the image. This transform is obtained from the relation between three points. We use the function cv::warpAffine for that purpose.

- Applies a rotation about the image center to the transformed image.

- Waits until the user exits the program

- The tutorial's code is shown below. You can also download it here.

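```cpp
#include "opencv2/imgcodecs.hpp"
#include "opencv2/highgui.hpp"
#include "opencv2/imgproc.hpp"
#include <iostream>

using namespace cv;
using namespace std;
const char* source_window = "Source image";
const char* warp_window = "Warp";
const char* warp_rotate_window = "Warp + Rotate";

int main( int argc, char** argv )
{
    Point2f srcTri[3], dstTri[3];          // the two sets of 3 points
    Mat src, warp_dst, warp_rotate_dst;    // input image and results

    // Load the image whose path is given as the first argument
    if( argc < 2 ) { cout << "Usage: " << argv[0] << " <image>" << endl; return -1; }
    src = imread( argv[1], IMREAD_COLOR );
    if( src.empty() ) { cout << "Could not open the image" << endl; return -1; }

    // Output image with the same size and type as the source
    warp_dst = Mat::zeros( src.rows, src.cols, src.type() );

    // Three source corners and the positions they are mapped to
    srcTri[0] = Point2f( 0, 0 );
    srcTri[1] = Point2f( src.cols - 1, 0 );
    srcTri[2] = Point2f( 0, src.rows - 1 );
    dstTri[0] = Point2f( src.cols*0.0, src.rows*0.33 );
    dstTri[1] = Point2f( src.cols*0.85, src.rows*0.25 );
    dstTri[2] = Point2f( src.cols*0.15, src.rows*0.7 );

    // Compute the 2x3 affine matrix and apply it
    Mat warp_mat = getAffineTransform( srcTri, dstTri );
    warpAffine( src, warp_dst, warp_mat, warp_dst.size() );

    // Rotate the warped image about its center (negative angle = clockwise) and scale it
    Point center = Point( warp_dst.cols/2, warp_dst.rows/2 );
    double angle = -50.0;
    double scale = 0.6;
    Mat rot_mat = getRotationMatrix2D( center, angle, scale );
    warpAffine( warp_dst, warp_rotate_dst, rot_mat, warp_dst.size() );

    imshow( source_window, src );
    imshow( warp_window, warp_dst );
    imshow( warp_rotate_window, warp_rotate_dst );
    waitKey(0);    // wait until the user presses a key
    return 0;
}
```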

Explanation

- Declare some variables we will use, such as the matrices to store our results and two arrays to store the 2D points that define our Affine Transform:

```cpp
Point2f srcTri[3], dstTri[3];
Mat src, warp_dst, warp_rotate_dst;
```

- Load the image whose path is passed as the first command-line argument: `src = imread( argv[1], IMREAD_COLOR );`

- Initialize the destination image as having the same size and type as the source:

```cpp
warp_dst = Mat::zeros( src.rows, src.cols, src.type() );
```

- Affine Transform: As explained above, we need two sets of 3 points to derive the affine transform relation. The source points are three corners of the image (top-left, top-right and bottom-left), and the destination points are given as fractions of the image size:

```cpp
srcTri[0] = Point2f( 0, 0 );
srcTri[1] = Point2f( src.cols - 1, 0 );
srcTri[2] = Point2f( 0, src.rows - 1 );

dstTri[0] = Point2f( src.cols*0.0, src.rows*0.33 );
dstTri[1] = Point2f( src.cols*0.85, src.rows*0.25 );
dstTri[2] = Point2f( src.cols*0.15, src.rows*0.7 );
```

- Armed with both sets of points, we calculate the Affine Transform using the OpenCV function cv::getAffineTransform, which returns a \(2 \times 3\) matrix (in this case warp_mat): `Mat warp_mat = getAffineTransform( srcTri, dstTri );`

We then apply the Affine Transform just found to the src image:

```cpp
warpAffine( src, warp_dst, warp_mat, warp_dst.size() );
```

with the following arguments:

- src: Input image

- warp_dst: Output image

- warp_mat: Affine transform

- warp_dst.size(): The desired size of the output image

We just got our first transformed image! We will display it shortly. Before that, we also want to rotate it.

Rotate: To rotate an image, we need to know two things:

- The center with respect to which the image will rotate

- The angle of rotation. In OpenCV a positive angle is counter-clockwise.

- Optional: A scale factor

We define these parameters with the following snippet:

```cpp
Point center = Point( warp_dst.cols/2, warp_dst.rows/2 );
double angle = -50.0;
double scale = 0.6;
```

- We generate the rotation matrix with the OpenCV function cv::getRotationMatrix2D, which returns a \(2 \times 3\) matrix (in this case rot_mat): `Mat rot_mat = getRotationMatrix2D( center, angle, scale );`

- We now apply the rotation to the output of the previous transformation:

```cpp
warpAffine( warp_dst, warp_rotate_dst, rot_mat, warp_dst.size() );
```

- Finally, we display our results in two windows, plus the original image for good measure:

```cpp
imshow( source_window, src );
imshow( warp_window, warp_dst );
imshow( warp_rotate_window, warp_rotate_dst );
```

- We just have to wait until the user presses a key, after which the program exits: `waitKey(0);`

Result

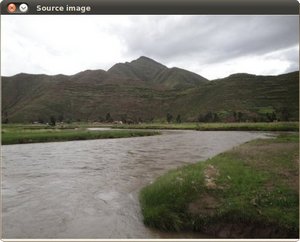

After compiling the code above, we can give it the path of an image as argument. The program then shows three windows: the original picture, the result of the first Affine Transform, and finally the result after also applying a negative rotation (remember, negative means clockwise) and a scale factor.

1.8.13
